Mastering Nano Banana: A Research-Based Guide to Google’s Next-Gen Prompting

Google has introduced a significant shift in visual creation workflows with its latest image models, popularly known by the codename 'Nano Banana.' The suite includes the high-velocity Nano Banana (Gemini 2.5 Flash Image) and the high-precision Nano Banana Pro (Gemini 3 Pro Image), and it represents a move toward 'semantic' rather than 'pixel-based' interaction. Unlike earlier models that relied on dense 'tag soups' of keywords, Nano Banana is designed to interpret natural language as a production brief, allowing creators to act as directors rather than prompt engineers.

The Architecture of an Effective Nano Banana Prompt

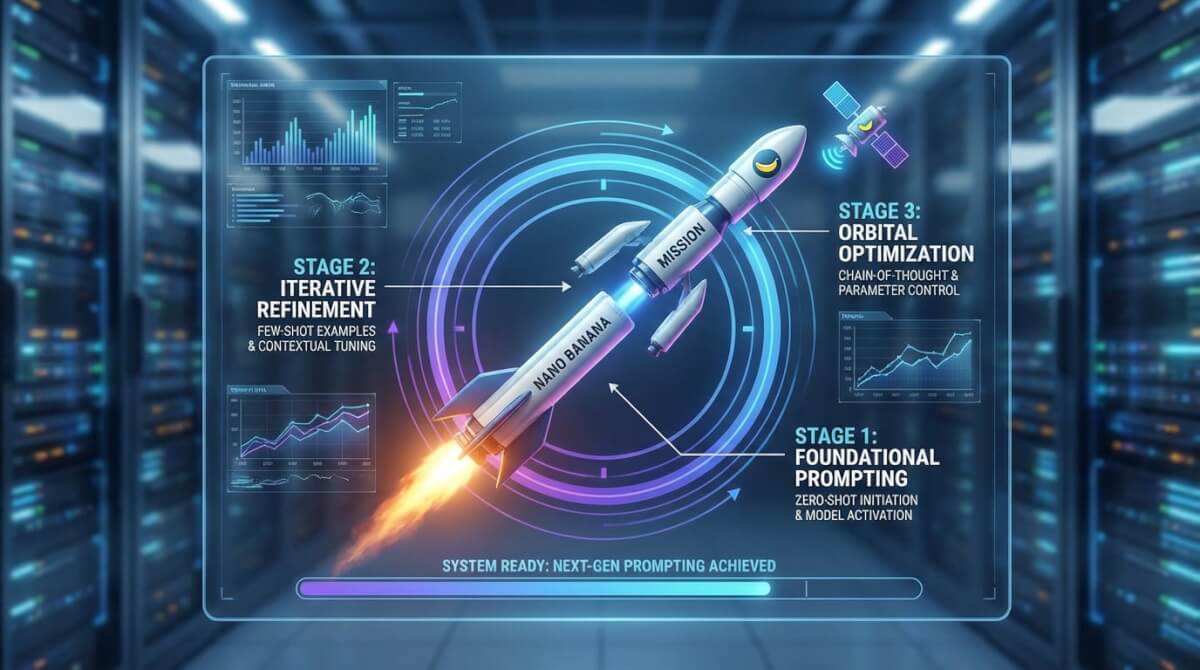

To achieve professional-grade results with Nano Banana, the recommended approach is to move away from fragmented keyword lists toward a structured narrative. A useful way to organize that narrative is a five-pillar framework: Subject, Composition, Action, Location, and Style. Because the Pro version incorporates 'Thinking' layers (interim processing steps that resolve logic and physics before rendering), it can handle complex spatial relationships that once required manual masking.

For example, instead of prompting 'dog, park, sunset,' a prompt built on the five pillars would read: 'A low-angle shot of a golden retriever mid-sprint through a sun-drenched meadow at golden hour. The lighting should create a warm backlight effect on the fur, with a shallow depth of field (f/1.8) blurring the lush green background.'
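One way to keep the five pillars explicit is to hold them as separate fields and assemble the prompt at the end. The sketch below is illustrative only: `PromptBrief` and its `render` method are hypothetical names, not part of any Google SDK, and the sentence template is just one reasonable arrangement of the pillars.

```python
from dataclasses import dataclass


@dataclass
class PromptBrief:
    """Hypothetical container for the five pillars described above."""
    subject: str
    composition: str
    action: str
    location: str
    style: str

    def __post_init__(self) -> None:
        # Reject briefs that leave any pillar blank.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"pillar '{name}' must not be empty")

    def render(self) -> str:
        # First sentence: composition, subject, action, and location.
        scene = f"{self.composition} of {self.subject} {self.action} {self.location}."
        # Second sentence: style and lighting direction.
        return f"{scene} {self.style}"


brief = PromptBrief(
    subject="a golden retriever",
    composition="A low-angle shot",
    action="mid-sprint through",
    location="a sun-drenched meadow at golden hour",
    style=(
        "The lighting should create a warm backlight effect on the fur, "
        "with a shallow depth of field (f/1.8) blurring the lush green background."
    ),
)
prompt = brief.render()
print(prompt)
```

Keeping the pillars as fields makes it easy to vary one of them (for instance, the location) while leaving the rest of the brief unchanged.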

Beyond Generation: Semantic Editing and Style Transfers

One of the most disruptive features of the Nano Banana ecosystem is its ability to perform 'semantic masking.' In traditional workflows, changing a specific element in an image required manual 'in-painting.' Nano Banana allows for conversational edits. Given an original image and a simple natural-language command like 'Replace the sky with a dramatic neon-lit thunderstorm,' the model identifies the sky's boundaries automatically and adjusts the environmental lighting on the ground to match the new sky.

This capability extends to 'Identity Locking' and character consistency. By utilizing the model's support for up to 14 reference images, users can maintain a single character's facial features across multiple scenes. Prompting for consistency requires explicit referencing, such as: 'Using the facial structure from Image 1, place this character in a noir-style detective office, leaning over a mahogany desk illuminated by a single desk lamp.'
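The explicit-referencing pattern can be sketched as a small helper that numbers the reference images and checks the 14-image limit quoted above. This is a minimal sketch under stated assumptions: `build_consistency_prompt` and the returned dictionary layout are hypothetical, the file name is a placeholder, and nothing here calls a real API.

```python
MAX_REFERENCE_IMAGES = 14  # limit quoted in this article


def build_consistency_prompt(reference_paths, feature, scene):
    """Pair reference images with a prompt that cites them by position."""
    if not reference_paths:
        raise ValueError("at least one reference image is required")
    if len(reference_paths) > MAX_REFERENCE_IMAGES:
        raise ValueError(
            f"got {len(reference_paths)} reference images; "
            f"the limit is {MAX_REFERENCE_IMAGES}"
        )
    # Label images by 1-based position: "Image 1", "Image 2", ...
    labels = [f"Image {i}" for i in range(1, len(reference_paths) + 1)]
    if len(labels) == 1:
        joined = labels[0]
    else:
        joined = ", ".join(labels[:-1]) + " and " + labels[-1]
    prompt = f"Using the {feature} from {joined}, place this character in {scene}"
    return {"images": list(reference_paths), "prompt": prompt}


request = build_consistency_prompt(
    ["hero_front.png"],  # placeholder file name
    feature="facial structure",
    scene=(
        "a noir-style detective office, leaning over a mahogany desk "
        "illuminated by a single desk lamp."
    ),
)
print(request["prompt"])
```

Numbering the images in the same order they are supplied keeps the "Image N" references in the prompt unambiguous.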

Leveraging Real-World Knowledge and Search Grounding

A key differentiator for Nano Banana Pro is its integration with Google Search grounding, which allows the model to generate visuals that are factually informed. If a user prompts for an infographic about a specific historical event or a technical diagram of a SpaceX Starship, the model can draw on current search results to help keep rendered text and structural components accurate.

- Text Rendering: Unlike predecessors that struggled with spelling, Nano Banana Pro treats text as a primary semantic layer, allowing for legible, localized posters and mockups.

- Multilingual Support: Prompts can specify text in dozens of languages, ensuring that branding remains consistent across global markets.

- Diagrammatic Reasoning: The model can 'solve' visual problems, such as taking a handwritten sketch and turning it into a professional vector-style diagram.

Prompt: "A professional infographic showing the stages of a rocket launch with perfectly rendered, legible technical labels."
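When the exact label text matters, one option is to spell out each label in the prompt rather than leaving the wording to the model. The helper below is a sketch of that idea: `build_infographic_prompt` is a hypothetical name, the label list is purely illustrative, and quoting labels verbatim is an assumed prompting convention rather than a documented requirement.

```python
def build_infographic_prompt(topic, labels, language="English"):
    """Build an infographic prompt that lists every label verbatim."""
    if not labels:
        raise ValueError("provide at least one label")
    # Quote each label so the intended spelling is unambiguous.
    quoted = ", ".join(f'"{label}"' for label in labels)
    return (
        f"A professional infographic showing {topic}. "
        f"Render these labels exactly as written, in {language}: {quoted}. "
        "All text must be legible and correctly spelled."
    )


infographic_prompt = build_infographic_prompt(
    topic="the stages of a rocket launch",
    labels=["Liftoff", "Stage separation", "Orbit insertion"],  # illustrative labels
)
print(infographic_prompt)
```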

Best Practices for High-Resolution and 4K Outputs

For developers and enterprise users, maximizing the output quality of Nano Banana involves specifying technical details within the prompt. While the model handles much of the complexity, naming camera lens details (e.g., '35mm anamorphic lens') and cinematic color grading (e.g., 'muted teal and orange palette') gives it concrete constraints to render against at 4K. Furthermore, iteration is key: since Nano Banana is built on a conversational foundation, it is often more effective to refine an image through follow-up prompts ('Make the metallic textures more reflective') than to restart the generation from scratch.
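The two habits described above, adding camera constraints and refining through follow-ups, can be sketched as plain data handling. Everything here is an assumption for illustration: `describe_shot`, `RefinementSession`, the example scene, and the turn format are hypothetical and do not correspond to a specific SDK.

```python
from dataclasses import dataclass, field


def describe_shot(scene: str, lens: str, color_grade: str) -> str:
    """Append lens and color-grading constraints to a scene description."""
    return f"{scene.rstrip('.')}, shot on a {lens}, with a {color_grade}."


@dataclass
class RefinementSession:
    """Keeps the base prompt and every follow-up in order."""
    base_prompt: str
    follow_ups: list[str] = field(default_factory=list)

    def refine(self, instruction: str) -> None:
        # Store the follow-up instead of rewriting the base prompt.
        cleaned = instruction.strip()
        if not cleaned:
            raise ValueError("follow-up instruction must not be empty")
        self.follow_ups.append(cleaned)

    def turns(self) -> list[dict[str, str]]:
        # One user turn per prompt, oldest first.
        texts = [self.base_prompt, *self.follow_ups]
        return [{"role": "user", "text": text} for text in texts]


first_prompt = describe_shot(
    "A chrome robot in a rain-soaked alley",  # illustrative scene
    lens="35mm anamorphic lens",
    color_grade="muted teal and orange palette",
)
session = RefinementSession(first_prompt)
session.refine("Make the metallic textures more reflective")
for turn in session.turns():
    print(turn["text"])
```

Recording follow-ups as separate turns mirrors the conversational workflow: the original brief stays intact, and each refinement is a small, reviewable change.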

As AI image generation moves toward this 'Thinking' model, the value shifts from knowing the 'right keywords' to understanding the principles of visual storytelling and composition. Nano Banana stands at the forefront of this transition, bridging the gap between raw machine learning and intuitive creative direction.