The Mechanics of Neural Graphics: How DLSS Frame Generation Redefines Rendering

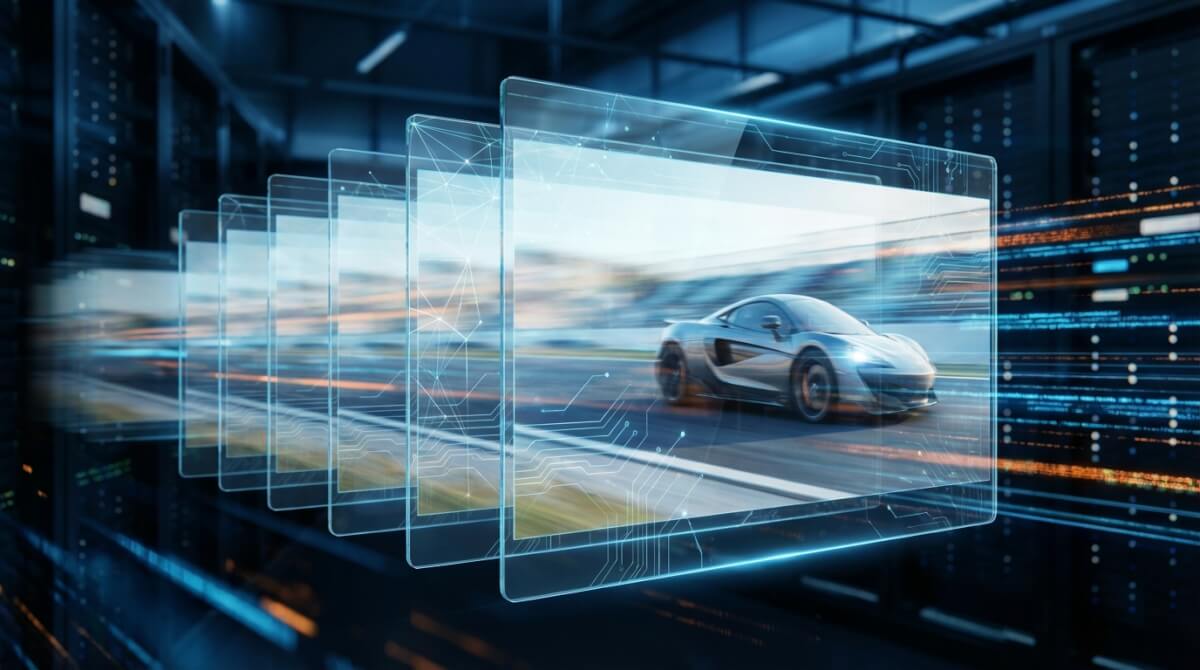

In the traditional graphics pipeline, every pixel displayed on a screen is the result of a rigorous calculation involving geometry, lighting, and textures. However, as the industry pushes toward path-traced realism and high-refresh-rate 4K displays, "brute force" rendering has reached a point of diminishing returns. To work around these hardware limitations, NVIDIA introduced Deep Learning Super Sampling (DLSS) Frame Generation. Rather than rendering every displayed frame, this technology uses a neural network to synthesize entire additional frames from the frames that were rendered, largely decoupling the displayed frame rate from the constraints of the CPU and traditional GPU rendering.

The Architecture of Interpolation

Frame Generation, first introduced with the DLSS 3 suite for the Ada Lovelace (RTX 40-series) architecture, is built around a convolutional autoencoder. This neural network does not simply duplicate existing frames; it creates a plausible intermediate image that sits between two traditionally rendered frames. To achieve this, the system requires four primary data inputs: the current game frame, the prior game frame, an optical flow field, and game engine motion vectors.

The synergy between these inputs is critical for visual fidelity. While game engine motion vectors track the movement of geometric objects across the screen, they often lack data for non-geometric elements such as particles, reflections, and dynamic lighting. This is where the dedicated Optical Flow Accelerator (OFA) comes into play. The OFA analyzes pixel-level changes between sequential frames to capture the "flow" of light and shadows, allowing the AI to predict how a reflection on a moving car or the flicker of a fire should appear in the generated frame.
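
To make the role of the two motion sources concrete, here is a minimal sketch in Python. It is not NVIDIA's network: it is a hand-written, classical midpoint interpolation on a single row of pixels, and the names `sample` and `interpolate_midframe` are hypothetical. It assumes a per-pixel displacement (in pixels, from the prior frame to the current one) and falls back to the optical flow value wherever the engine supplies no motion vector.

```python
from typing import List, Optional


def sample(row: List[float], x: float) -> float:
    """Linearly sample a 1-D row of pixels at a fractional position, clamped to the edges."""
    x = min(max(x, 0.0), len(row) - 1.0)
    left = int(x)
    right = min(left + 1, len(row) - 1)
    t = x - left
    return row[left] * (1.0 - t) + row[right] * t


def interpolate_midframe(
    prev: List[float],
    curr: List[float],
    engine_mv: List[Optional[float]],
    optical_flow: List[float],
) -> List[float]:
    """Build the frame halfway between prev and curr (illustrative only)."""
    mid = []
    for x in range(len(prev)):
        # Prefer the engine's motion vector; use optical flow where the
        # engine has none (e.g. particles or reflections in the article's terms).
        flow = engine_mv[x] if engine_mv[x] is not None else optical_flow[x]
        from_prev = sample(prev, x - 0.5 * flow)  # look back half the motion
        from_curr = sample(curr, x + 0.5 * flow)  # look ahead half the motion
        mid.append(0.5 * (from_prev + from_curr))
    return mid


# A bright pixel moves two positions to the right between the two frames.
prev = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
curr = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
engine_mv = [2.0, 2.0, 2.0, None, 2.0, 2.0]  # no engine vector at index 3
optical_flow = [2.0] * 6

print(interpolate_midframe(prev, curr, engine_mv, optical_flow))
# [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

A plain 50/50 blend of the same two rows would instead give two half-bright pixels at indices 2 and 4, which is the kind of ghosting that motion-aware interpolation is meant to avoid.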

Overcoming the Latency Barrier

A significant technical challenge of generating frames is the delay it introduces. Because the AI must wait for the next traditionally rendered frame to complete before it can interpolate the intermediate one, input responsiveness lags behind what the displayed frame rate suggests. To mitigate this, NVIDIA integrates its Reflex technology into the DLSS pipeline. Reflex synchronizes the CPU and GPU to minimize the render queue, which can bring system latency to a level comparable to, and in some cases lower than, native rendering with Reflex disabled. This allows the user to experience the visual smoothness of 120 FPS even if the underlying game engine is only processing 60 FPS, although input is still sampled at the underlying 60 FPS rate.
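
The timing behind that trade-off can be worked through with a small sketch. This is an idealized model, not a measurement: it assumes perfectly even frame pacing and ignores the cost of running the network itself, and the names `Timing` and `interpolation_timing` are hypothetical. Under those assumptions, the newest rendered frame is held back for as long as the generated frames ahead of it are on screen.

```python
from typing import NamedTuple


class Timing(NamedTuple):
    displayed_fps: float
    render_interval_ms: float   # time between rendered frames
    display_interval_ms: float  # time between displayed frames
    hold_back_ms: float         # extra wait before the newest rendered frame is shown


def interpolation_timing(rendered_fps: float, generated_per_rendered: int = 1) -> Timing:
    """Idealized pacing for interpolation-based frame generation (assumption-laden)."""
    render_interval_ms = 1000.0 / rendered_fps
    displayed_fps = rendered_fps * (generated_per_rendered + 1)
    display_interval_ms = 1000.0 / displayed_fps
    # Each generated frame is shown for one display interval before the
    # real frame it was interpolated toward can appear.
    hold_back_ms = generated_per_rendered * display_interval_ms
    return Timing(displayed_fps, render_interval_ms, display_interval_ms, hold_back_ms)


t = interpolation_timing(60.0)
print(f"{t.displayed_fps:.0f} FPS displayed, newest rendered frame held back ~{t.hold_back_ms:.2f} ms")
# 120 FPS displayed, newest rendered frame held back ~8.33 ms
```

In this model the 60 FPS to 120 FPS case costs roughly half a rendered-frame interval of extra delay, which is the kind of overhead that trimming the render queue is meant to offset.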

The Shift to Transformers and Multi-Frame Generation

Recent developments in neural graphics have seen a shift from Convolutional Neural Networks (CNNs) to transformer-based architectures. With the announcement of DLSS 4 alongside the Blackwell (RTX 50-series) architecture, NVIDIA introduced "Multi Frame Generation." While previous iterations could insert only one frame between rendered ones, this new model can generate up to three additional frames per rendered frame, which NVIDIA says can multiply performance by up to 8x compared to native rendering when combined with the rest of the DLSS suite.
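
The arithmetic behind those multipliers can be sketched as follows. The function names are hypothetical, and the 2x upscaling factor is purely an illustrative assumption chosen to show how a frame-count multiplier and a separate rendering speedup would compound; it is not a measured figure.

```python
from fractions import Fraction
from typing import List


def generated_frame_phases(generated_per_rendered: int) -> List[Fraction]:
    """Evenly spaced positions (0 = prior frame, 1 = current frame) for generated frames."""
    n = generated_per_rendered
    return [Fraction(k, n + 1) for k in range(1, n + 1)]


def displayed_frame_multiplier(generated_per_rendered: int) -> int:
    """Frames shown per rendered frame: the rendered one plus the generated ones."""
    return generated_per_rendered + 1


def combined_speedup(generated_per_rendered: int, other_speedup: float) -> float:
    """Compound the frame multiplier with a separate speedup (e.g. upscaling)."""
    return displayed_frame_multiplier(generated_per_rendered) * other_speedup


print(generated_frame_phases(1))      # [Fraction(1, 2)]
print(generated_frame_phases(3))      # [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]
print(displayed_frame_multiplier(3))  # 4
print(combined_speedup(3, 2.0))       # 8.0, with the assumed 2x from another source
```

Three generated frames alone account for a 4x increase in displayed frames, so an 8x headline figure implies a further contribution from something other than frame generation.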

The move to transformers is significant because these models are better equipped to handle long-range spatial and temporal relationships. This results in superior temporal stability, reducing the "ghosting" and shimmering artifacts that occasionally plagued earlier versions of AI frame interpolation. By using the FP8 compute capabilities found in newer hardware, these larger models can run within a real-time frame budget, allowing the AI to handle more complex scenarios like path-traced lighting with fewer visual errors.

Impact on Game Development

As AI-generated frames become harder to distinguish from rendered ones, the philosophy of game design is shifting. Developers can now target higher visual tiers, such as full path tracing, that were previously considered impractical in real time. Key benefits of this transition include:

- CPU Bottleneck Relief: Since generated frames do not require the CPU to run game logic or issue draw calls, they can raise the displayed frame rate in CPU-bound, simulation-heavy titles.

- Scalability: The same AI models can scale performance across different output resolutions and supported hardware generations.

- Energy Efficiency: Generating frames on dedicated Tensor Cores often consumes less power than rendering them through traditional CUDA cores.
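
The CPU bottleneck point in the list above can be illustrated with a rough model. Everything here is an assumption for the sake of the example: the function name `displayed_fps`, the idea that the rendered rate is simply the lower of a CPU limit and a GPU limit, and the 1 ms per generated frame, which is a hypothetical cost rather than a published figure.

```python
def displayed_fps(
    cpu_fps_limit: float,
    gpu_render_ms: float,
    generated_per_rendered: int = 1,
    generation_ms: float = 1.0,  # hypothetical GPU cost per generated frame
) -> float:
    """Rough displayed frame rate when either the CPU or the GPU is the limit."""
    # Generated frames cost GPU time but no CPU time in this model.
    gpu_ms_per_rendered = gpu_render_ms + generated_per_rendered * generation_ms
    gpu_fps_limit = 1000.0 / gpu_ms_per_rendered
    rendered_fps = min(cpu_fps_limit, gpu_fps_limit)
    return rendered_fps * (generated_per_rendered + 1)


# CPU-bound title: the CPU can only prepare 50 frames per second,
# while the GPU could render a frame in 10 ms.
print(displayed_fps(50.0, 10.0, generated_per_rendered=0))  # 50.0
print(displayed_fps(50.0, 10.0, generated_per_rendered=1))  # 100.0
```

In the CPU-bound case the rendered rate stays at 50 FPS either way, so lowering resolution would not help, while adding one generated frame per rendered frame doubles what reaches the display.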

As neural rendering matures, the boundary between "real" and "generated" graphics continues to blur. The transition from simple upscaling to multi-frame generation signifies a future where AI does not just assist the rendering process, but actively constructs the majority of what the eye sees on screen.